Photo by Andrew Neel on Unsplash

The overview of system design

This post is a digest of reading The complete guide to System Design in 2023

What is system design?

System design is a phase to define the architecture, interfaces and data processing of a system that can best satisfy the specified requirements which cannot be resolved by a single component. So, when doing system design, we will use many kinds of technicals and combine or connect different components together.

Why is system design important for a software engineer?

After two decades of fast development, the internet population increased a lot, and the requirements from the users as well. Large amount of users lead to high throughput network traffic which causes issues like concurrency, consistancy, availablity. So engineers need to implement various functions for users and it should probably be some kind of complex system. To be a high-level engineer, one should have strong knowledge of handling heavy traffic and huge amounts of data. And engineers also need to be familiar with some common design patterns and some essential components, which will help one to build the most efficient system with less time and resources.

Fundamentals of System Design

Scaling

Scaling is the ability to adjust our services to meet workload changes, which would make sure that the services have enough resources to process the users request without any waste. There are typically two kinds of scaling:

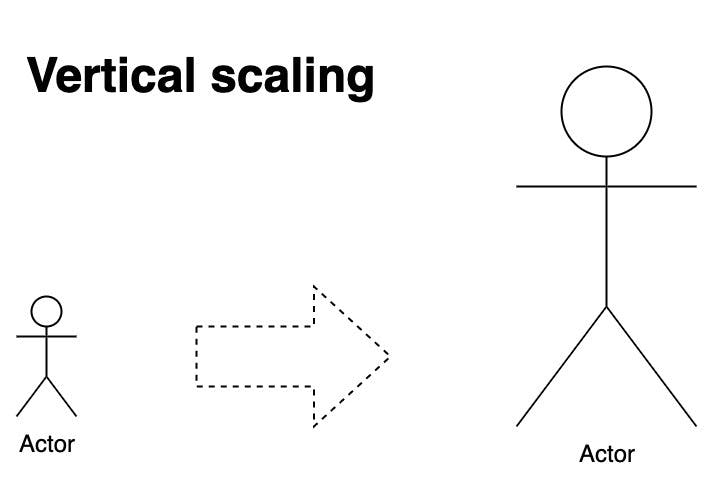

Vertical scaling

Vertical scaling means increasing the service instance itself so that it becomes more powerful and can handle a much heavier workload. Or on the contrary, decreasing itself when the workload is not so heavy any more so that there will be less waste.

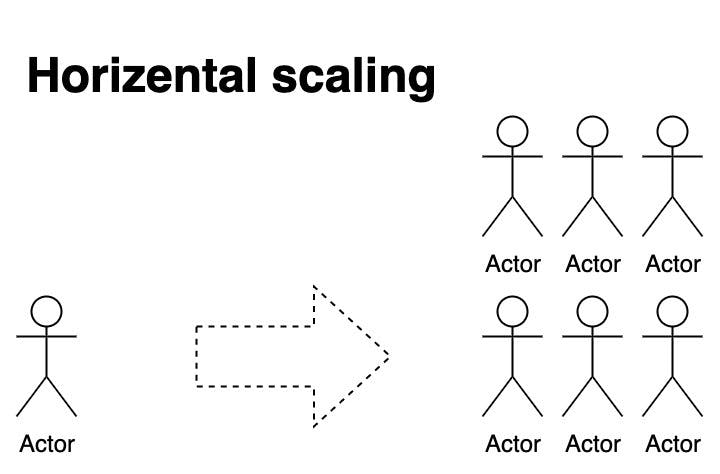

Horizental scaling

Another method to adjust services for the upcoming workload change is to increase the number of instances, then there will be more instances to bear the heavy workloads. In most situations, horizental scaling is the best practice because it is more flexible and safe. For example, when scaling horizental, the old instance can keep running without any stop, and only new tracffics will be handled by new instances.

horizontal-scaling

CAP Theorem

The CAP Theorem is a fundamental theorem in system design. It gives three important properties(Availability, Consistance and Partition Tolerance) to measure a distributed system and states that a distributed system can only provide good performance on two of the three properties simultaneously. That indicates there must be some tradeoffs between the three properties. More detail about CAP Theorem.

CAP Theorem

State management

In the real world, all the information we get currently is highly relevant to the past, and what we do now will continue to imfact the future. So, that is the state which will keep changing based on its past. In my opinion, all of the complexity came from the state. We work on retriving users history data as fast as possible, we work on preventing users’ data from being lost in case of software or hardware failure, we work on ensuring the data is consistent. On the contrary, when we handle a system that is state-less, it will be much easier.

Actually, the data is the state I discussed above. So, how we transmit, process and store data is actually how we handle the state.

Data transmission

We usually use some kind of network protocol to transmit data from one place to another, so we need to be familiar with TCP/IP, HTTP, UDP, webSocket etc. And we also should know how to use RPC to make the communication between services more efficient and robust.

Storages

Storages are used to do state persistence. Although in our practice the main storage medium is disk, there are many different methods to do the work in different situations. There are some kinds of storage methods.

Block Storage

Block storage is a technology that cuts the data into small blocks with a fixed size, and then stores the fixed block on physical storage such as a hard disk, and finally gives a distinct ID for each block to locate and access it. The biggest advantage of block storage is extremely low latancy on accessing and retrieving. Block Storage is widely used in many kinds of relational or transactional databases, time-series databases and containers etc. Learn more about Block Storage

File Storage

File storage is a hierarchical storage method. With this method, data is stored in files. Files are stored in directories which are still under some other directories. This storage method works well for limited amounts of data. When it comes to large amounts of files, accessing and retriving files becomes time-consuming. Learn more about File Storage.

Object Storage

Object storage is designed to handle very large amounts of unstructured data. It has a great advantage of dynamic scalability. We usually use Object storage via rest api instead of system level APIs. Object storage can provide immense or even infinite storage volume. So it is often used for archieving, backuping and logging. Learn more about Object Storage.

Message Queues

Message queue(short as MQ) is also a kind of database. The difference is that the MQ acts like a real queue or pipe. Data is poured in by an application and then be consumed asyncally by another appliction. There are some largely used message queue products: Kafka, RocketMQ, RabbitMQ, Pulsar.

Distributed File System

File system is used for origanizing data in files which would be put under some directories on disk. It is a great interface between users and hard disks. Distributed file system acts like a file system but it is built on top of legacy file systems, typically via network. Distributed file systems have the ability to scale infinitely, in theory. And it could keep one or more backups of any data in different physical storage, which can prevent data from being lost due to hardware failures.

HDFS maybe the most widely used distributed file system, and it is surely the base of the Hadoop ecosystem. Learn more about HDFS.

System design patterns

There are some commonly used design patterns that we developers should be familiar with.

Bitmap & Bloom Filters

Bitmap is actually a bit array. It is used to map an integer to the index of the bit array, and the value on that index can only be 0 or 1, which can be used to represent the extra information of that integer. A typical usage of bitmap is Bloom Filter. Bloom Filter uses one or more bitmaps. Each bit in a bitmap represents the existence of an item. Usually, if an item that is hashed into or identified by an integer exists, the corresponding bit of index that is same with the item ID will be set to 1, or else it will remain 0. After that, we can then check if an item exists efficiently only by checking if the corresponding bit is 1. To reduce the size of the bitmap, Bloom Filter uses two or more hash functions to do the same thing. Learn more about Bloom Filter.

Consistent Hashing

A hash function can map data of arbitrary size to some fixed-size values. The mapped value is usually numeric and is used to index the original data. Consistent Hashing is used to map data to some storage nodes and make sure that only a small set of data needs to be transmited when there are nodes added in or removed out. With consistent hashing, nodes used to store data are arranged like a ring, each node keeps a range of numbers as it slots. Then each data item is hashed into a number as its key, which is then mapped to a slot in the ring. After that, if some node is going to be removed from the ring, only slots of the corresponding node should be transmited to some other nodes. Consistent Hashing is important in distributed systems and works closely with data partitioning and replicating. Learn more about Consistent Hashing.

ACID & Distributed Transaction

Strictly speaking, a transaction must be atomic, consistent, isolated and durable, which we call ACID.

Atomicity: operations in a transaction should be either all executed or none of them be executed.

Consistency: only valid data following all the rules and constrians can be written into database.

Isolation: it should be guaranteed that one transaction should not affect or be affected by other transactions.

Durability: the final state of the transaction must be stored immediately after commitment in case of subsequent failures.

A distributed transaction is a set of operations that should be performed across two or more processes. It is typically coordinated across separate processes that are connected by network. The complexity comes from network issues such as latancy, timeout and bandwidth limitation. The most common known methods are 2-phase commit(2PC) and 3-phase commit(3PC).

Inverted index

When there are requirements to do a full-text search, developers would probably use the inverted index technique to origanize their data. The famous opensource full-text search engines are Elasticsearch, Solar, Sphinx. Learn more about Inverted Index.

Databases

Databases overview

In our applications, we don’t directly write and read data from disk. Usually we use a kind of database to organize data in a specific format and index data so that we can query with great efficiency. There are different types of database for different cases of use.

Ralational Database

Widely used in transactional systems, maybe the most popular type of database. Consistence is the most important feature.

MySQL, PostgreSQL, MS SQL Server, Oracle, MariaDB, SQLite

No-SQL Database

Unlike ralational database, data stored in No-SQL database sometimes has no fixed format/schema. So it usually allows dynamic schemas. Many databases can be classified as No-SQL databases, such as kv-store, kv-cache, document-store, graph db etc. There is a full list on Wikipedia.

Analytic Database

Used mainly for analysing large amounts of data. Read performance is the key feature. Many of them use column-oriented storage to avoid reading unwanted columns and increase the query speed.

Time-series Database

It can be thought of as a special analytic database which indexes its data mainly on time dimmension.

KV-Database

It is also named kv-store, and would be the basic store for some other kinds of database mentioned above. We use kv-store directly for high performance, maybe sometimes without persistence on disk but in memory, which makes it act as a cache.

How to choose a database

Database is the foundation of all software. Different databases provide different functions to meet different purposes. There are some typical scenarios listed below. However, in the practice process, which database we should use needs to be decided according to the data volume, read & write pattern, consistency requirements, data structure, budget, etc.

Strong Consistency Scenario

This is maybe the most common scenario in software development. The core data of systems like e-commerce transaction, payment, stock transaction, bank business usually have complex relations and must be stored reliably and consistently. So Relational databases like MySQL, PostgreSQL, MS SQL Server, Oracle, MariaDB, SQLite may be the best choices.

High-speed caching

Sometimes we need to store and retrive a tiny value identified by a specific key, and unable to accept too large latency, then we can use databases like Redis, Memcache, etc.

Analytic Scenario

This scenario is often reguarded as OLAP and requried by BI system. When doing analyses, there will be a large amount of data querying but less writing. And most of the time there will be some heavy computation for statistics and calculations. Databases like ClickHouse, Greenplum, Vertica, Presto, Impala would be the best choices.

Time-series data handling

Time-series databases(TSDB) are widely used in devops fields to store monitoring metrics which are born time based. In this scenario, users often query the data within a specified time range, so the time dimension is the base index field.

IOT events storing is another scenario that is time based. There are also some specially designed databases like MatrixDB, TDengine, etc.

There is a rank of Time-Series databases.

Graph Traversal Scenario

When doing analysis on social connections and some other similar data, the program usually needs to travel along edges and nodes. In order to maximize efficiency, we usually use Graph Database such as Neo4j and others listed in the ranking.